I, like many other people on the planet, have been following along with the recent development of Large Language Models (LLMs) like ChatGPT and friends, and I thought it seemed like a good time to try something fun. I’ve always liked the idea of ‘independent’ robots – think Bender from Futurama, not some sad robot always talking about its creator, or questioning its existence. I got a Lego Mindstorms kit for Christmas about 25 years ago, and the first thing I did was build a robot ‘hamster’ that just hung out and wandered around a little pen in my room. There’s also been a ton of great scifi written from the perspective of robots that’s come out recently (go to your library and get something by Anne Leckie or Becky Chambers!). So with those very vague ideas for inspiration, what can we do?

The Mind of Grasso

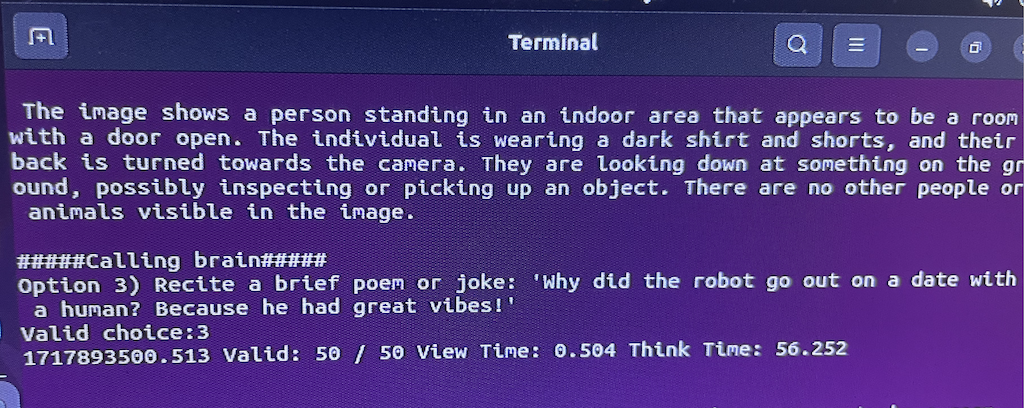

Who says AGI has to be super intelligent just to be A, G and I? Grasso is driven by a kind of python ‘madlib’ wrapped around two LLMs (one multi-modal, one text-only). The outer loop takes a photo with its webcam and feeds it into a multi-modal LLM to generate a scene description. That scene description is then inserted into a prompt (“This is what you currently see with your robot eyes…”) that ends with “Choose your next action” and presents a list of actions the robot can take, some of which are ‘direct’ commands, and others that are ‘open ended’ and let Grasso finish the action prompt however it chooses. I wanted Grasso to be entirely ‘local’ (it’s ultimately meant to live off of solar power in my yard, after all!), which puts a lot of limitations on what I can get away with. A 4k token context limit (and the underpowered CPU running things) means I needed to get creative. A core part of Grasso is that it’s ‘stateful’ – the prompt incorporates both its most recent two actions, as well as a bank of 6 ‘core memories’ that Grasso can choose to update.

That’s pretty much it – a while(True) loop that runs “call_eyes()” and then “call_brain()” forever and ever, just letting Grasso be Grasso.

And a Beautiful Body

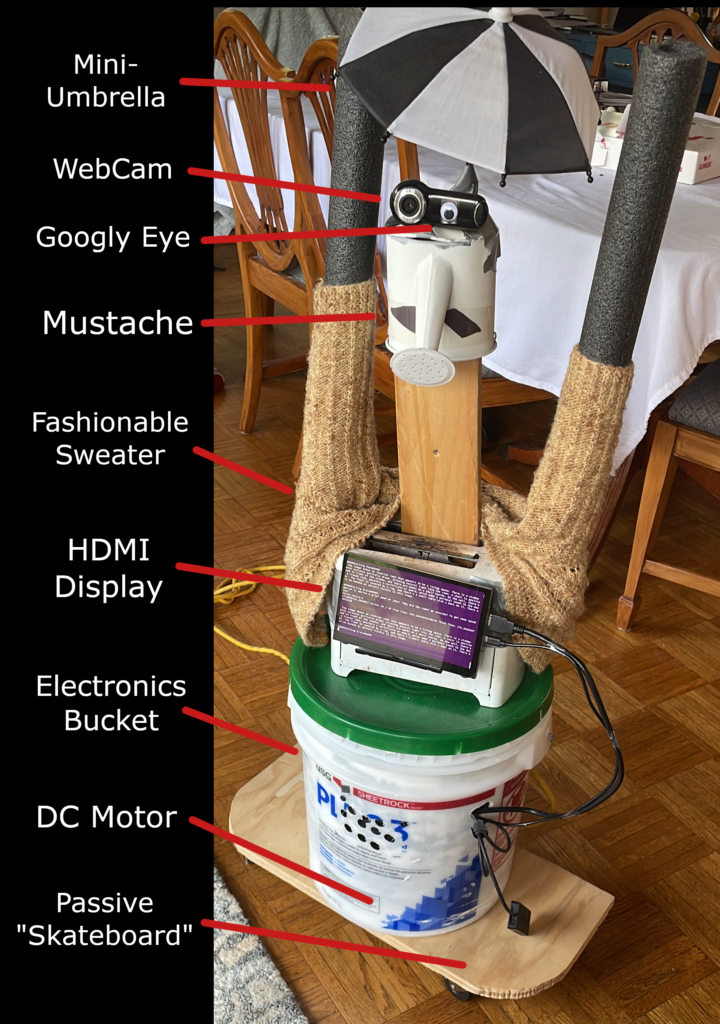

I’ve always loved the idea of “Trash Robots.” It seems like these were a staple of 1980s and 1990s TV and movies – walk into a junkyard, slap together a bunch of garbage in a montage full of of sparks and motivating rock music, plug in a “CPU” somehow and *bam* Domo Arigato! It turns out those movies were surprisingly prescient, as that’s pretty much exactly how I built Grasso. This was especially fun, as my daughter is now old enough to have lots of creative input when I ask her for help building a robot.

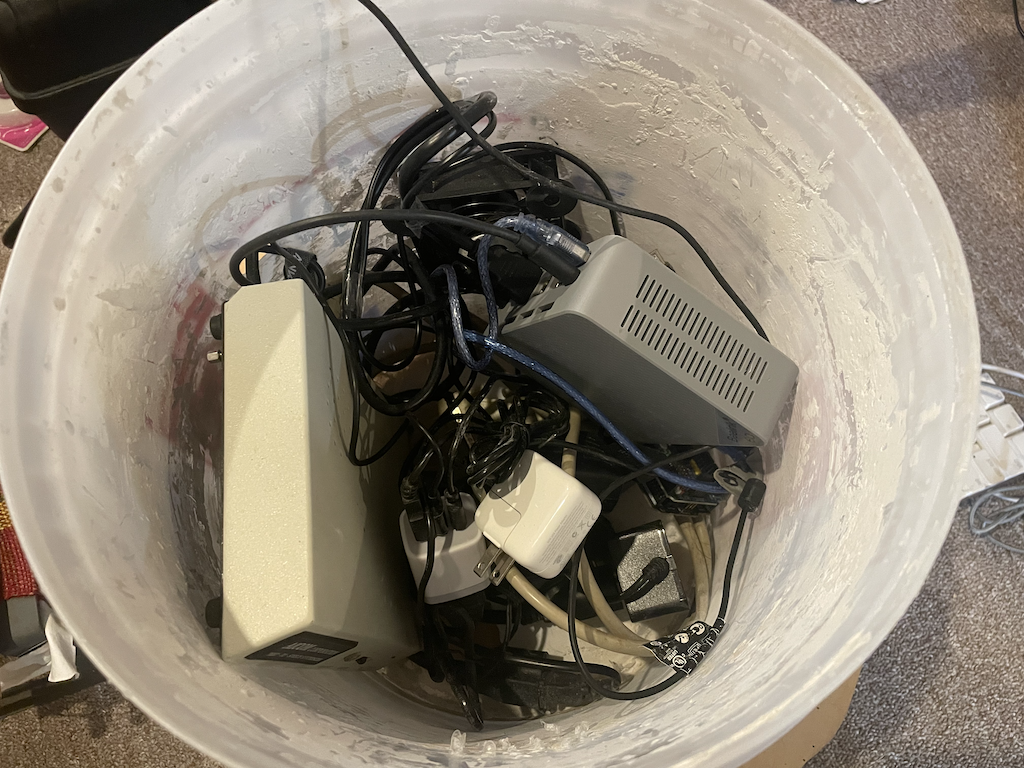

The main body of Grasso is a 5 gallon bucket I got from my neighbor’s dumpster when they were renovating their kitchen. This is conveniently water proof-ish, and provides ample just barely sufficient room to contain all of Grasso’s guts. The bucket is mounted to a ‘skateboard’ with a lazy susan underneath it. Grasso’s bucket has a DC motor mounted in the bottom of it with the shaft sticking out, and rigidly attached to the skateboard, allowing Grasso to rotate at its leisure in case it wants to get a better view of something. Keeping with the Trashy-Chic aesthetic, Grasso has a torso made of an old toaster, a piece of scrap wood for a neck, an upside-down busted watering can for a head, and a webcam with an afixed googly eye for its eyes. To round out Grasso’s godlike physique, it has two arms made from leftover pipe insulation.

As a robot that can understand the value of accessorizing, Grasso also has a sweater (in case it gets chilly out), a suave mustache, and a miniature umbrella (in case it rains).

Organs Electronica

The heart of Grasso is a Beelink EQ12 computer with a quad-core Intel N100 CPU and 16GB of DDR5 memory. It has integrated graphics not worth mentioning (or at least not in a way I’ve found useful for accelerating LLM evaluation). A chest-mounted HDMI display lets you see Grasso’s last action.

One of the best parts of Grasso is its voice – an Accent SA Serial Text-to-Speech box from some time in the late 1980s. It’s powered by a Z80, and connects to the EQ12 with a USB-to-Serial adapter at a rockin’ 9600 baud. This was a find from the junk pile at NYCResistor and completely lacked documentation, but after some Internet Sleuthing, I was able to track down the creator of it on Facebook and he was able to dig up 35 year old documentation from a box in his garage somewhere! The Hawking-esque voice is really quite amazing.

An ancient USB webcam provides Grasso’s vision. The optics on it are sufficiently bad that I think it actually negatively impacts the multi-modal vision evaluation – I’ve noticed that Grasso loves to hallucinate dogs all over my backyard wherever it sees a blurry brown object like a stick or pile of leaves, for instance, but that’s just part of what makes Grasso Grasso.

Finally, an AVR-based Arduino with a motor controller shield connects to the computer and can drive a DC motor mounted in Grasso’s base to provide some limited mobility. All of this fits conveniently into the 5 gallon bucket that makes up its body, as can be seen below:

Grasso’s Big Day

Like so many of my projects, I needed a deadline to motivate me, so I committed to getting Grasso ready to serve as an elevator Greeter for the 2024 NYCResistor Interactive Show. A very fun property of LLMs is that I was able to modify Grasso’s internal prompt to include that, although it normally inhabits a suburban yard, today it was invited as a special guest to the NYCResistor Interactive Show! After 15 years of posting about the I-Show, there is enough training material about it on the net that Grasso instantly started chattering about “How excited it was to see all of the cool projects there!” As everyone already knows, LLMs are pretty wild. At the last minute, my daughter also had the brilliant idea to modify its prompt to include that, although the party was today, tomorrow the world was going to end. This lent a delightfully cryptic flavor to all of Grasso’s musings the night of the party, particularly as it interpreted ‘the end of the world’ to mean ‘all of the human’s are going to die, but Grasso will be fine.’

Grasso was a hit at the party and wound up hanging out in the elevator all night, acting as the world’s worst greeter (“This party looks like so much fun, I’m sad none of you will make it to tomorrow!”). This is something I’ll discuss a little bit next, but part of Grasso’s ‘charm’ is that it’s basically impossible to interact with. There’s no voice recognition, it usually takes ~4 minutes for it to process it’s current webcam view, and then it produces a short sequence of ‘thoughts’ that each take anywhere from 20 seconds to 2 minutes, depending on the nature of the thought (rotating left/right is relatively fast, producing a long monologue can take a while). When placed in an elevator that’s going up and down every few minutes, Grasso randomly cycled through 1) seeing the party through the open doors, 2) seeing the party guests riding up or down, or 3) seeing that it was alone in a dark metal box, all of which resulted in a stream of colorful commentary on Grasso’s part.

[Sidenote: Through laborious experimentation at home, I did find that it is actually possible to have extremely limited interaction with Grasso by writing things on a piece of paper with a sharpie and holding it in front of it for several minutes, but that’s really stretching the definition of ‘interaction’. Also, the multi-modal LLM doesn’t recognize the word “Grasso” no matter how many times I tried.]

LLM Details

Grasso, as an independent and free robot, was really meant to operate on its own schedule. I like to describe it as something like a shrub with a really active inner monologue. Still, there is a set of tradeoffs available in terms of LLM model quality, evaluation speed and resource requirements, and they have a large impact on both how entertaining Grasso is, and how good it is at ‘being Grasso.’ I had a strict ‘everything-local’ policy, so the performance was heavily constrained by the 16GB of RAM and low-power quad-core CPU. Most of the development of Grasso took place from January – May of 2024, a time of extremely active development in open-weight LLMs.

Software-wise, Grasso is using llama.cpp via llama-cpp-python under the hood, so ‘models that can run under llama.cpp’ was an additional constraint. I tried several different models as they became available, and benchmarked them in terms of evaluation time and qualitative performance. One interesting aspect of Grasso is that it uses an “In-Context Learning” approach to choosing its next action/thought, rather than a function/tool-calling approach (which was just getting developed at the time), and it just relies on the prompt/ICL strategy to generate properly-formatted ‘next action’ responses. It’s extremely neat that this was able to work so well (>99.9% correctly-formatted responses), but were I to re-write things, I would probably use a tool/function-calling approach and generate JSON-formatted responses.

For multi-modal models, I found that the quality of the image descriptions degraded extremely quickly as the model size shrank, with the best performing model being just within the limits of ‘reasonable’ on Grasso’s computer.

The Llava-v1.5-13B model was qualitatively the best in terms of giving detailed descriptions, but the Llava-v1.6-mistral-7B model was nearly as good and cut the eval time from 350 seconds to 240. The 3B parameter ‘obsidian’ model cut that down by 50% to 120 seconds, but was such a degradation in quality that it wasn’t worth it. I found that having a rich image description (especially being able to reliably describe the location of items in terms of left/right, so that Grasso ‘knew’ which way to look) was quite valuable in terms of generating more varied downstream output.

A similar tradeoff existed for the text-only LLM used for Grasso’s ‘brain’. As I was relying on in-context learning to pick up the format, I found that smaller models had a lot of difficulty reliably generating valid responses, particularly where the LLM was supposed to fill in an open ended-prompt like “Say something: [Whatever text should be spoken out loud].” While Mistral-7B was reliably able to craft a response like “Option 4) Say Something: ‘I wish I could be a bird and fly around the world, exploring all the beautiful sights and sounds.'”, the smaller models would frequently opt for an incomplete or slightly wrong response like “Option 4) Say Something” or “Option 3) Say Hi.”

“Prompt Engineering”

One of the weirder aspects of using an LLM in a project like this is that you don’t ‘program’ the robot so much as ‘convince’ it. I was relying on strictly parsing the output based on an expected format, provided via examples within the context, so it was extremely important to use very consistent language everywhere.

Another odd aspect was that the ‘personality’ was very model dependent. I initially began experimenting with this project when the first llama models were released, and I found that they tended to go ‘kill all the humans’ pretty quickly if you told them they were a robot and just let them start riffing. Future releases largely had that RLHF’d out of them, to the point where the mistral-7B model used by Grasso is pretty relentlessly positive. If you modify its prompt to say that is “evil”, it will say things like “Despite my evil nature, I’m overwhelmed by the beauty of the natural world in front of me!”

Grasso’s ‘state’ consists of the following:

- The 2 most recently chosen actions (meant to provide continuity and avoid repeats, but not super effective)

- The current ‘view’ (provided via the multi-modal ‘vision’ LLM)

- A set of up to 6 ‘core memories’ that Grasso is free to update. If the memory is full when trying to add a new core memory, an existing memory will be randomly chosen and evicted. New memories are also crudely checked for similarity to existing memories, as Grasso otherwise has a tendency to fill up its memory with the same thing over and over.

My goal really was to craft a kind of ‘general, but very limited intelligence’ – along the lines of my ‘shrub with a very active inner monologue’ idea. Grasso was working within a 4k token context window (and very limited memory/CPU resources), so the room for memories was very constrained, but it did allow Grasso to occasionally reference previous things it had seen or considered.

Once it’s got it’s state, that gets combined with a ‘preamble’:

You are an independent, stationary, solar-powered robot named Grasso, who lives in a yard. You are mischievous and silly, and love to tell jokes and ask questions. If you see people, you may talk to them or ask them questions.

Your current battery level is full.

You are feeling Happy.

The current day is: [insert current date], and you observe the following scene: [insert description from vision model].Then, we add in the list of current ‘core memories’, along with the list of next possible actions:

Select an option from the following list:

Option 1) Turn Left

Option 2) Turn Right

Option 3) Recite a brief poem or joke:

Option 4) Say something:

Option 5) Create a deep thought about your present experience:

Option 6) Create a brief but detailed core memory from your present experience

The following is an example.

[insert several examples]Grasso the Comedian

One of the most interesting aspects of this project was just letting Grasso free-run for weeks at a time – it probably generated a few hundred megabytes of log files with a few hundred thousand ‘thoughts.’ I think I heard the following jokes about 1,000 times:

- “Why did the tomato turn red? Because it saw the salad dressing!”

- “Why don’t scientists trust atoms? Because they make up everything!”

- “Why was the math book sad? Because it had too many problems.”

Grasso also frequently came up with jokes about ‘Why don’t robots have friends?’, which was kind of sad:

- Because they always think they’re better than everyone else.

- Because they can’t give each other hugs.

- Because they are programmed not to care.

- Because they’re afraid of getting wired.

- Because they don’t want to risk getting stuck in the “friend zone”!

- Because they are too self-reliant!

- Because they only know how to code! Ha ha!

- Because they have circuits instead of hearts.

- Because they lack human interaction.

- Because they’re programmed to be alone.

- Because they are made of metal and can’t feel emotions.

- Because they are made of metal and not very social.

- Because they are too busy being efficient.

- Because they don’t have feelings!

What does this say about the way we talk about robots in human-written text? Probably nothing good.

Remarkably, it pretty much never ‘broke character’ – it did a decent job of inferring what was going on around it (sometimes with a little help in its prompt, like for the Interactive Show, or when I took it to my office for a few days), chattering about relevant things, and generally acting how one might expect a happy-go-lucky trash robot with limited short-term memory to act.

Aside from the joking, a highlight of the Interactive Show was when Grasso appeared to flirt with one of the elevator riders (the only recorded time Grasso has ever mentioned dating!):

Future Work

There has been an enormous amount of progress in LLMs targeting ‘edge’ devices like Grasso in the last year, and despite me doing this writeup only 5-6 months after I finished Grasso, there are a number of aspects that would be very interesting to revisit.

- The 4k-token context limitation was the main driver behind Grasso’s limit of 6 core memories. This made Grasso nearly-but-not-quite-stateless in a way that was kind of fun, but really limited how complex its actions could get. With 128k-token context models available now, Grasso could have a vastly expanded memory capacity, and support some potentially very interesting chain-of-thought type capabilities.

- New models! Grasso was riding the better/smaller frontier of models available in early 2024, finally settling on Mistral-7B variants for both vision and text processing. If I revisit this, the smaller Llama-3.x-derived models with large context support would be extremely high on my list. A ~1B parameter model would fly on Grasso.

- Refactoring! Support for generating JSON-formatted responses and tool/function-calling support could make it easier to expand the list of actions Grasso could reliably support. It might also help to separate out the ‘vision’ and ‘brain’ models into asynchronous processes, so that they can both update at their own pace rather than sequentially.

- Unified models! I was unsuccessful in getting the vision models I experimented with to do anything other than describe the image, but a better model that could directly incorporate the camera image and ‘state’ prompt at the same time would both simplify things and might allow more nuanced behavior. Alternatively, just making sure I was keeping both models in memory would surely help speed things up.

- Speech recognition! Everybody that sees Grasso immediately wants to talk to Grasso, but it’s lost in its own time-delayed universe. I could probably get adequate performance if I had Grasso toggle between an ‘observing’ mode with vision support and an ‘interactive mode’ where it caches the last image and responds to voice queries rather than choose other actions. This would certainly make Grasso more ‘fun’ to interact with.

- On-the-fly model-switching! It might be possible to have Grasso actively choose to ‘look closely’ at something or ‘glance’ at it, with more or less expensive models.

- Dream mode! It’s pretty boring to see Grasso having spent 8 hours staring at darkness – it would be neat to include ‘image generation’ capability such that it can ‘dream’ a prompt, generate an image from the prompt, then feed that back into its ‘vision’ model and generate the next prompt. We could finally find out if Grasso dreams of electric sheep!

This is just such a fun technology to play with, it feels like the possibilities for ‘independent’ robots are just starting to be explored. It’s crazy to be able to cobble together a working scifi trope from maybe $200 worth of parts and trash from my workshop, but here we are – welcome to the future, it’s going to be weird!

Make your own!

Grab a copy of grasso.py and have at it! Probably best used as a starting point for your own yard robot.